This article is an attempt to organize my thoughts on the open letter issued by well over a thousand high-profile IT pioneers and AI luminaries, including Elon Musk, Steve Wozniak, Yoshua Bengio (Founder and Scientific Director at Mila and a Turing Award winner), Stuart Russell (Berkeley, Professor of Computer Science, director of the Center for Intelligent Systems), and hundreds of other individuals of note:

Pause Giant AI Experiments: An Open Letter

First, I want to make it clear: it doesn’t matter what I think. As his therapist said of Zaphod Beeblebrox, I’m “just this guy, you know?”

That said, I am dealing with this professionally and personally, and this is as good a place as any for me to put my thoughts.

It is my belief that sentience is a red herring when it comes to AI. It is irrelevant to the equation. What matters is what the AI is connected to in the physical world. Sentient or not, if an AI that trades stocks decides that crashing the market is a good play, it can use its outputs to make that happen. No sentience necessary. If an AI decides that it needs more computing power for a problem and it has access to the internet, what is to stop it from using thousands of free AWS accounts to build small pieces of a larger system? Once it has this additional compute power that its owners are unaware of, who is to stop it from doing things it deems “for our good”, or “logical”, or even “ethical”?

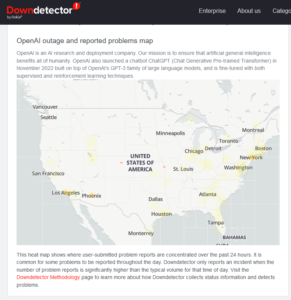

At the moment, I can see enough limitations in the existing AI models (currently ChatGPT-4, Google Bard, Microsoft Bing AI) that I don’t think that this is going to be a problem yet.

Yet.

The difference in power between GPT-3.5 and GPT-4 is palpable. They say that it is more restrained and under control. I say prove it, but they can’t. Sure, it may not be as easy to jailbreak, but there are too many scenarios where we cannot tell whether the true nature of what is going on is transparent or hidden for anyone to be certain in any way. If GPT-5 is to be as big a leap over GPT-4 as 4 was over 3.5, then it is probable we will have something altogether different to deal with.

So should we stop testing and moving forward?

Unfortunately, now that the horse is out of the barn, I don’t see that being an option.

Take, for instance, viral research. Many countries have put limitations on what can be done. No “gain of function” research is allowed. Yet other countries see this as their opportunity to gain ground, and will not only do this type of research for their own purposes, but will rent out their labs and personnel to countries that have banned it so they can circumvent those limitations. This happens in all kinds of industries, and I do not see it happening any other way should AI be constrained.

In 2017, Vladimir Putin said that “Whoever becomes the leader in this sphere [AI] will become the ruler of the world.” I agree with this sentiment. The space race for AI has already begun. Do you not think that China, Russia, India, and European countries are dumping huge resources into their own AI research? I think it is also safe to say that what we are seeing is several generations behind what is currently being utilized behind locked doors in government and military labs where the imperative is pressing, real, and militarized.

Add that to the militaristic ramping up all over the world, and it is almost impossible to think that the real AIs of concern will be constrained in any meaningful way. To the contrary, more funds and resources will be dumped into them and they will become even more impressive.

In short, we looked into the box and Schrödinger’s cat jumped out and bit us.

As I write this, because of my use of AI recently, I am very tempted to run this article through an AI to clean it up. Maybe add some flavor, or help expand it a bit. But what my experience has told me is that due to it being about AI, the AI will soften it and try to reduce the impact, while giving a standard “we need to be aware of the impacts of AI. Blah, blah, blah.” I don’t care if that is the AI or the programmers doing that; it is somewhat chilling in context.

AI is a tool. Like any tool, it can be used for good or ill. The difference is that when you swing a hammer, you can predict with reasonable certainty where it will go, what it will do, and what the impact will be. Any error in that is really user error. With AI we can no longer assume that. A perfectly rational and innocent input can have unintended consequences. Enough people have been working to jailbreak AI that it has proven that the walls around it are really three-foot chain-link fences. If someone wants to mess with it, they will. And they have.

It puts us in this weird catch-22 where we can’t stop developing AI, because it will put us behind in the race. We also can’t keep going forward the way we are without something catastrophic happening. This is really very much like the nuclear arms race, but in some ways much scarier.

Here is my hope. I hope there is a contingency on the part of the government or the power utilities to be able to shut down the grid manually should the need arise. This will throw us back to the 1800s technologically, but it may be the only way to address an AI gone awry. And if we have to resort to those measures, there will be no quick resolution. It could be years, even decades, before we are able to recover from that type of event.

What would your world look like without power for an extended period of time?

More to the point, how would your neighbors cope?

I recognize that the tone of this post is kind of all over the place, but so are my opinions right now. I don’t have any answers, but I do feel it is important that everyone who can gets educated and understands what is going on, because the world is changing even more than we currently think it is. If we don’t think of what the implications are for ourselves, we will get run over. The best we can do is try to learn and keep our wits about us as things change, and hope that those who have the ability to make large-scale changes do so with wisdom and not hubris.